26 Understanding the experimental design

An essential step in developing a replication is to understand the original study design. That understanding allows you to assess the internal validity of the experiment: do the measures and treatments test the hypotheses, and is the treatment responsible for changes in the dependent variable?

Questions to ask when reviewing the design include:

- Who were the participants? Why were they chosen?

- What information were participants given?

- What was the process by which the experiment was run?

- What were the treatment and control conditions?

- What incentives were offered to participants?

- What environment was used (e.g. online or lab)?

Once you can answer those questions, you are in a position to decide whether the design tests the hypothesis and whether alternative explanations are ruled out. Additional questions about validity arise when examining how the experiment and data analysis were conducted.

26.1 Class example

Study 3b of Dietvorst et al. (2015) was an online survey completed by Amazon Mechanical Turk participants.

Participants: The authors recruited participants from Amazon Mechanical Turk. Participants were 34 years old on average, and 53% were female.

Information: Participants were asked to predict the rank of US states by the number of airline passengers who departed from each state in 2011. They were told that they would receive a prediction from “a statistical model developed by experienced transportation analysts” based on 2006–2010 data. They were also told, “This is a sophisticated model, put together by thoughtful analysts.”

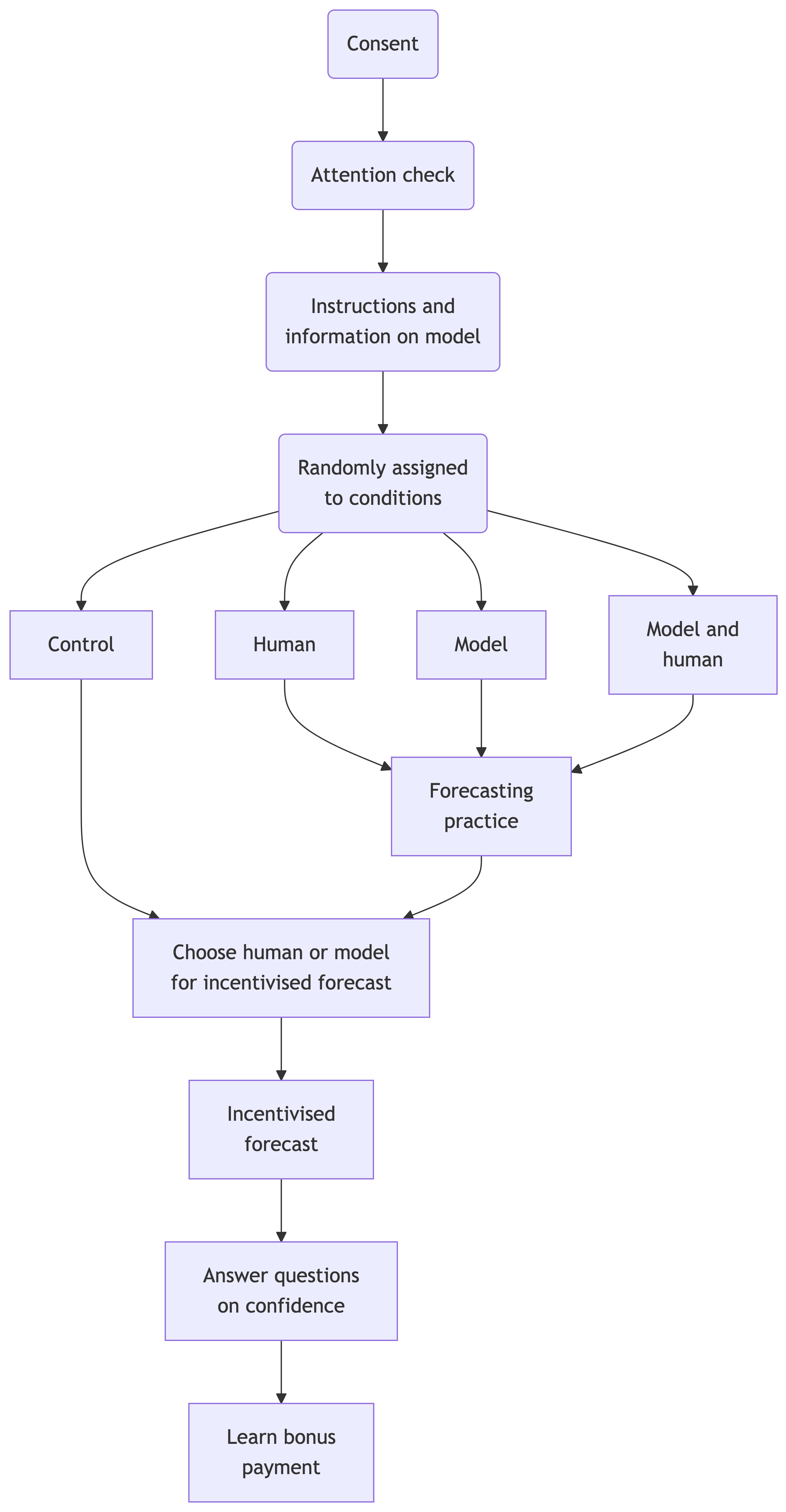

Process: After consent and instructions, participants were allocated to one of four conditions: three treatment conditions and a control. Participants in each treatment condition went through 10 practice forecasts with feedback. All participants, including those in the control condition, were then asked whether they wanted to use their own forecast or the model’s forecast for one incentivised forecast. Regardless of their choice, they still entered their own forecast and were asked about their confidence in their own forecast and in the model’s forecast before learning their bonus.

Conditions: There were four experimental conditions, which differed in the first (practice) stage of the experiment. In each of the 10 practice forecasts, participants gained experience as follows.

- Model: Participants saw the model’s prediction and the true rank.

- Human: Participants made a forecast and then saw their own prediction and the true rank.

- Model and human: Participants made a forecast and then saw their prediction, the model’s prediction and the true rank.

- Control: No practice forecasts.

Incentives: Participants were paid a $1 show-up fee and could earn up to $1 for accurate forecasting. They were paid $1 for a perfect forecast, with the bonus decreasing by $0.15 for each unit of error in their estimate.
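When planning a replication, it can help to write the payment rule down as code so that the incentive is unambiguous. The following is a minimal sketch of the bonus rule described above, not the authors' implementation. It assumes that a "unit of error" is one rank position and that the bonus cannot fall below $0; the function name `forecast_bonus` and its parameters are hypothetical.

```python
def forecast_bonus(predicted_rank: int, true_rank: int,
                   max_bonus: float = 1.00,
                   penalty_per_unit: float = 0.15) -> float:
    """Return the bonus in dollars for a single incentivised forecast."""
    # Assumption: one "unit of error" is one rank position.
    error = abs(predicted_rank - true_rank)
    bonus = max_bonus - penalty_per_unit * error
    # Assumption: the bonus is floored at zero rather than becoming negative.
    return round(max(0.0, bonus), 2)


if __name__ == "__main__":
    # Print the bonus schedule for errors of 0 to 8 ranks.
    for error in range(9):
        print(error, forecast_bonus(10 + error, 10))
```

Under these assumptions, the bonus reaches zero once the forecast is off by seven or more ranks, so the incentive only distinguishes between forecasts that are already fairly close to the true rank.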